The Web is Bloated, and It's Our Fault


Early in my career, fresh out of university, I had a boss who was a big advocate for old-school web development. He loved Java and JSF, focusing on server-rendered pages that sent as little data as possible over the wire. At the time, I was deep into the new world of client-rendered applications. React was the new hotness, and I was all in. I argued for beautiful, animated, and highly interactive Single-Page Applications (SPAs).

We had many fun discussions. He’d argue that a lean, server-rendered approach was superior, especially for users with poor connectivity. He wanted applications to be usable on his train rides, where the notoriously spotty internet of the German railway system made data-heavy apps unusable. I, on the other hand, was convinced that the future was client-side, with rich interfaces and seamless transitions.

In a way, we were both right. Time has shown that the industry swings back and forth. React eventually introduced Server Components, and server-side rendering (SSR) became mainstream again. The goal became the best of both worlds: a fast initial render from the server, with the client taking over for subsequent updates.

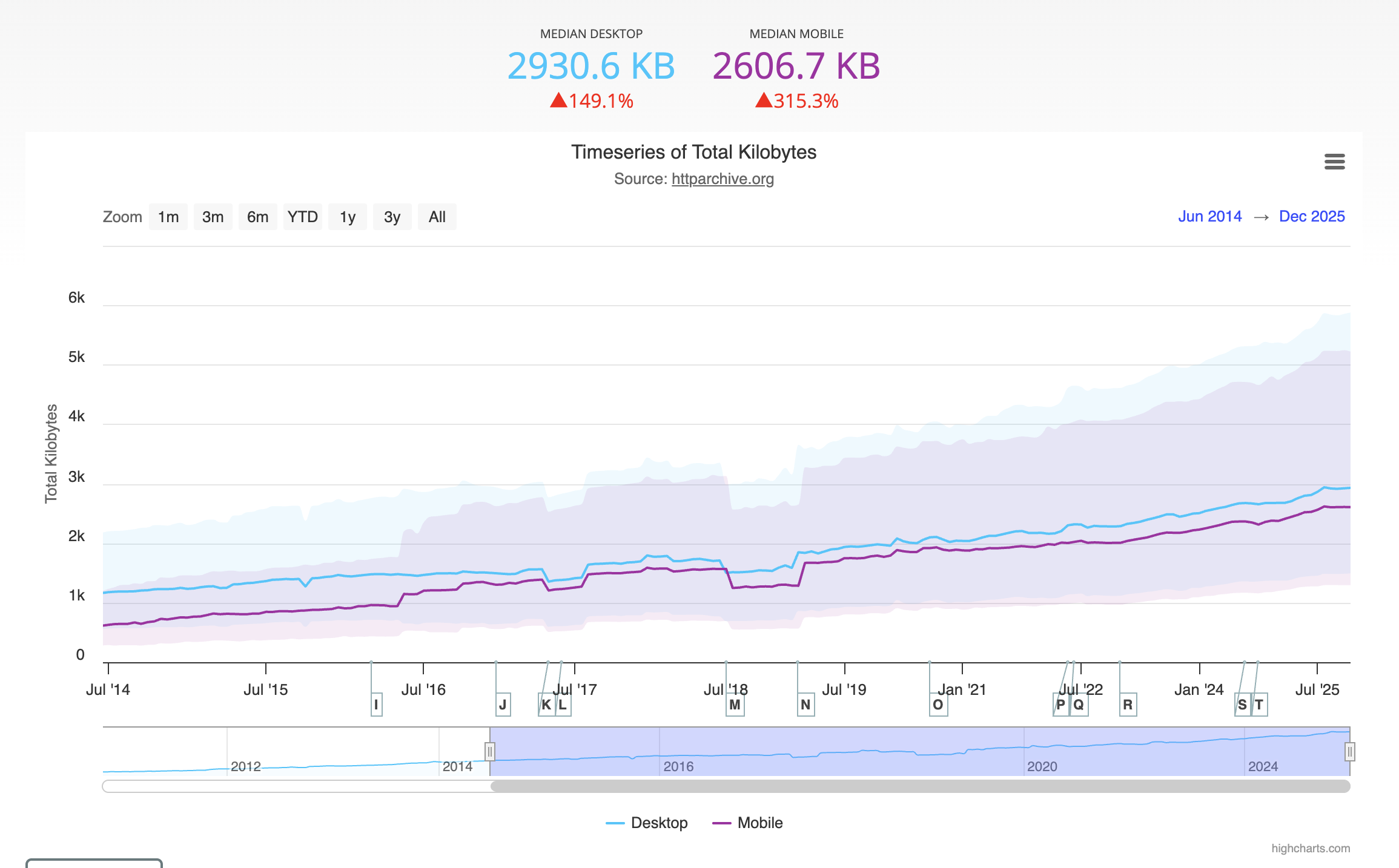

But a funny thing happened. Even with these optimizations, web pages kept getting bigger. The internet infrastructure improved, and we, as developers, got lazy. We started shipping massive JavaScript bundles, high-resolution images, and countless third-party scripts. We took fast internet for granted.

I remember when WhatsApp first came out. You could send a message on a 2G (EDGE) connection. Today, if my phone drops to 3G, many modern apps, including WhatsApp, struggle to function. We’ve created a web that demands a fast, stable connection.

This reality hit me hard recently when a widespread power outage, caused by an attack on the local infrastructure, left my entire area without electricity for nearly a week. With the power grid down, the cellular network was the only link to the outside world. But with everyone in the area relying on it, the few towers still in operation were overwhelmed. The connection was painfully slow, fluctuating between non-existent and a barely-there EDGE signal.

I couldn’t send a simple message or load any news websites. The government sent out emergency updates with links to informational pages, but those pages were impossible to load on my crippled connection. Regular news sites like rbb24 and Morgenpost updated impressively often, but they were also full of ads and painfully slow on this network, so they timed out most of the time. It was a frustrating and eye-opening experience. In a crisis, when information is most critical, the modern web failed me.

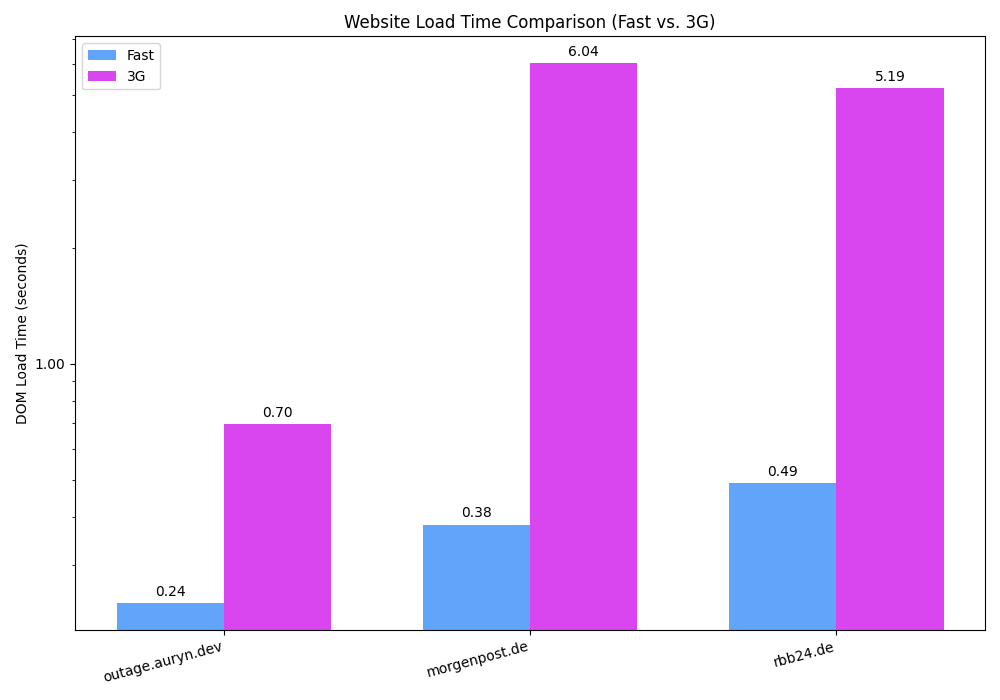

| Website | Network profile | Page size (MB) | Resources | DOM load (s) |
|---|---|---|---|---|
| rbb24.de | Fast | 1.00 | 82 | 0.38 |
| rbb24.de | 3G | 1.01 | 82 | 5.18 |
| outage.auryn.dev | Fast | 0.09 | 71 | 0.18 |
| outage.auryn.dev | 3G | 0.09 | 70 | 0.61 |
| morgenpost.de | Fast | 0.48 | 83 | 0.21 |
| morgenpost.de | 3G | 0.48 | 83 | 6.02 |

The table above paints a stark picture. On a fast connection, all sites load in under a second.

On a simulated 3G network, however, the news sites slow to a crawl, taking over 5 and 6 seconds respectively to load the DOM.

My simple aggregator, outage.auryn.dev, loaded in just 0.61 seconds on the same 3G network.

That’s roughly 8.5 to 10 times faster, and it’s the difference between getting critical information and being left in the dark.
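As a quick check on those ratios, the Python snippet below divides each news site’s 3G figures by the aggregator’s. It is only an illustration, not part of the original measurement setup, and it uses nothing but the numbers from the table.

```python
# 3G measurements copied from the table above.
# Each entry: (page size in MB, DOM load in seconds).
measurements_3g = {
    "rbb24.de": (1.01, 5.18),
    "morgenpost.de": (0.48, 6.02),
}
aggregator_3g = (0.09, 0.61)  # outage.auryn.dev on the same 3G profile


def ratio(baseline: float, improved: float) -> float:
    """How many times larger (or slower) the baseline is than the improved value."""
    return baseline / improved


for site, (size_mb, load_s) in measurements_3g.items():
    size_ratio = ratio(size_mb, aggregator_3g[0])
    load_ratio = ratio(load_s, aggregator_3g[1])
    print(f"{site}: {size_ratio:.1f}x the page size, {load_ratio:.1f}x the DOM load time")
```

The DOM load ratios come out at about 8.5x (rbb24.de) and 9.9x (morgenpost.de), and the page size ratios at about 11x and 5x. Note that morgenpost.de is less than half the size of rbb24.de yet takes longer on 3G, so page size alone does not explain the load times.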

This isn’t just a technical metric; it’s a measure of accessibility.

Driven by necessity, I spent an evening at the office (which had power) vibe-coding a solution. I built a small news aggregator using Elixir and Phoenix LiveView, designed to be as lightweight as possible. It fetched news from various sources and pushed only the text content to the browser over a WebSocket. The entire page weighed well under 1 MB (about 0.09 MB in the measurements above), and subsequent updates were just a few kilobytes. If the connection dropped, it reconnected automatically, so I only ever downloaded the information I had missed.
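The reconnect behavior described above can be sketched as a cursor-based resync. The Python below is a hypothetical illustration, not the author’s Elixir/LiveView code: it assumes each news item carries a monotonically increasing `id` and that a reconnecting client reports the last `id` it received. `NewsItem`, `NewsFeed`, `since`, and `encode` are made-up names, and the WebSocket transport is left out entirely.

```python
import json
from dataclasses import dataclass, field


@dataclass(frozen=True)
class NewsItem:
    id: int  # hypothetical: monotonically increasing, assigned by the server
    source: str
    headline: str


@dataclass
class NewsFeed:
    """Hypothetical in-memory store of plain-text news items, oldest first."""

    items: list[NewsItem] = field(default_factory=list)
    next_id: int = 1

    def publish(self, source: str, headline: str) -> NewsItem:
        item = NewsItem(self.next_id, source, headline)
        self.next_id += 1
        self.items.append(item)
        return item

    def since(self, last_seen_id: int) -> list[NewsItem]:
        """Return only the items a reconnecting client has not received yet."""
        return [item for item in self.items if item.id > last_seen_id]


def encode(items: list[NewsItem]) -> bytes:
    """Serialize items as compact JSON text: no markup, scripts, or images."""
    payload = [{"id": i.id, "source": i.source, "headline": i.headline} for i in items]
    return json.dumps(payload, separators=(",", ":")).encode("utf-8")


# Usage: the client received items 1 and 2, then the connection dropped.
feed = NewsFeed()
for n in range(1, 4):
    feed.publish("example-source", f"Update {n}")

last_seen = 2
missing = feed.since(last_seen)
print([item.headline for item in missing])
print(len(encode(missing)), "bytes on reconnect, versus", len(encode(feed.items)), "for a full resend")
```

Keying the resync on a cursor keeps each reconnect proportional to what was actually missed rather than to the size of the whole page, which matters most when a flaky link only stays up for a few seconds at a time.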

The code was (and still is) messy, a classic “vibe-coded” project born out of urgency, but it worked. I was able to stay informed about the repair status and share updates with my family. Just being able to see the latest news helped enormously. After the power was restored, I shut it down.

This experience was a powerful reminder of my old boss’s wisdom. He was optimizing for the right thing: resilience and accessibility. (Of course, what counts as the right thing still depends on the customer; news sites have to make money.)

As engineers, we have a responsibility to build a web that works for everyone, not just those with the latest hardware and the fastest internet. We can’t take connectivity for granted. If you’re building something, especially if it delivers critical information, consider your users in less-than-ideal situations.

The web is a powerful tool, but its power is diminished if it’s not accessible to everyone. Let’s build a better, more resilient web.

Thank you for reading.

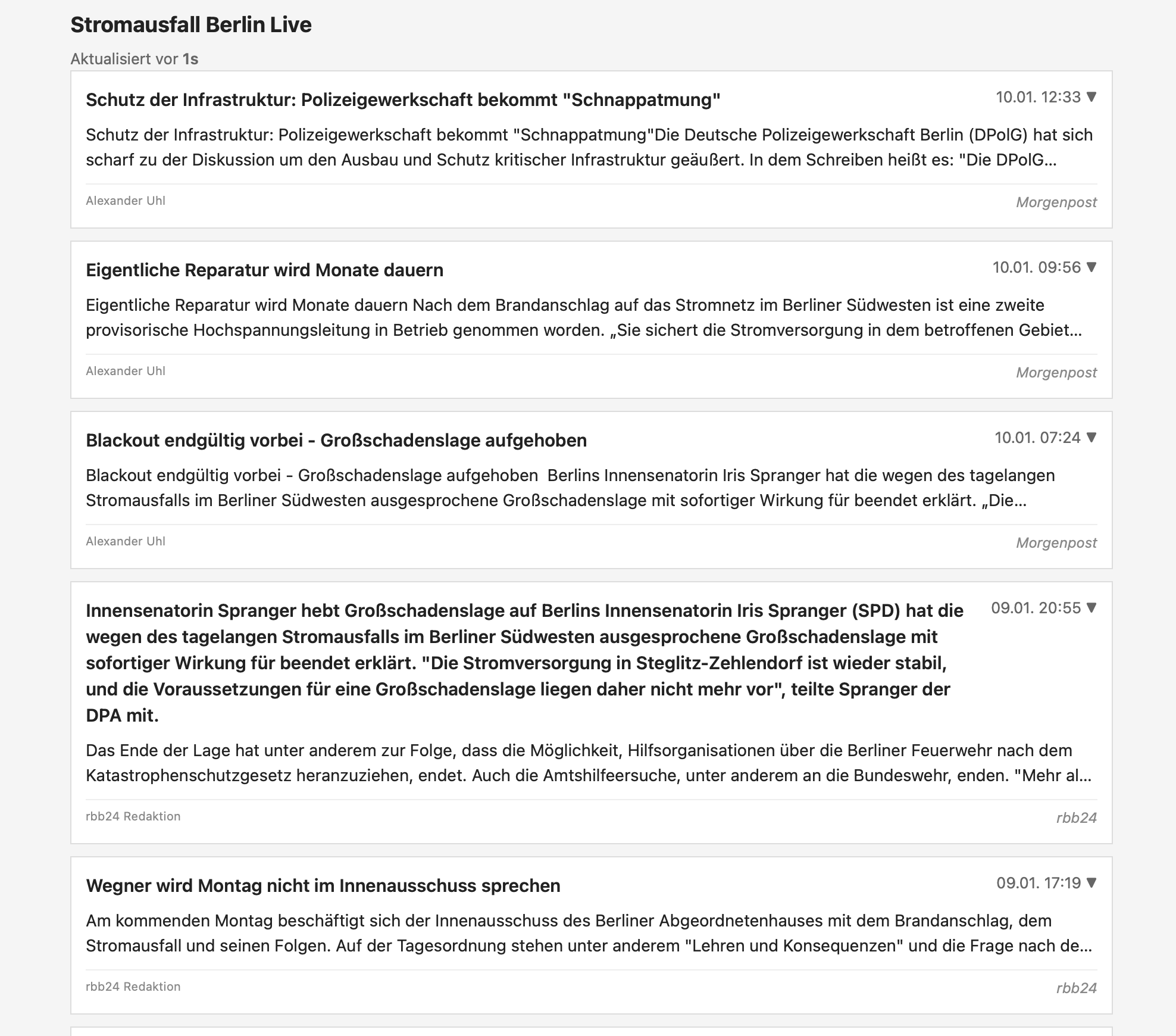

And as promised, there is the result of my vibecoded news page to show me the latest updates while the power outage lasted: